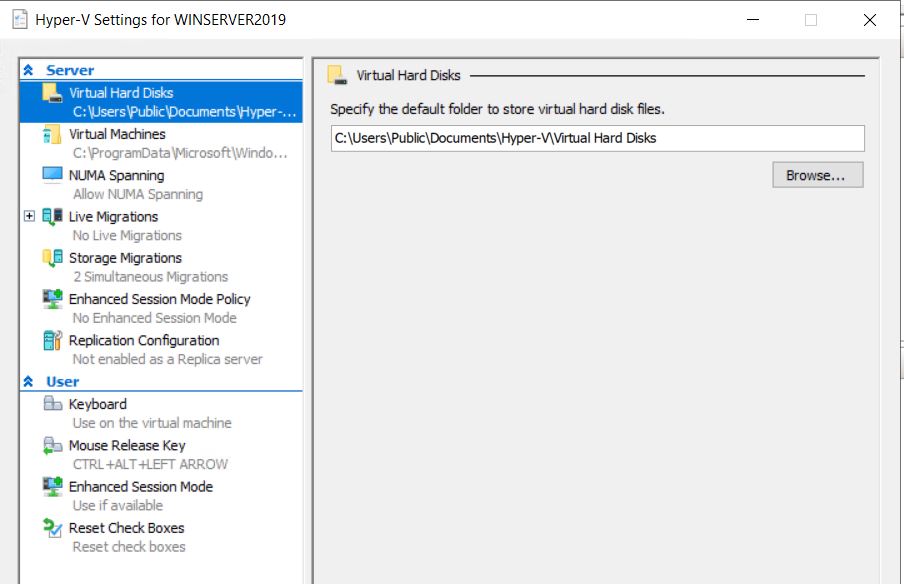

Using persistent memory in a Hyper-V virtual machine requires applications that can leverage DAX (Hyper-V, SQL Server). In a previous article, “Configure NVDIMM-N on a DELL PowerEdge R740 with Windows Server 2019”, I showed you how to set up persistent memory for use on Windows Server 2019.

When you try to enable physical GPUs in Hyper-V on Windows Server 2019, the option is not in the GUI. Windows Server 2016 offers the option in the GUI, but Windows Server 2019 does not, so here is how to enable it in Windows Server 2019. You can also use the RemoteFX vGPU feature on Windows Server 2019.

Applies to: Microsoft Hyper-V Server 2016, Windows Server 2016, Windows Server 2019, Microsoft Hyper-V Server 2019

Starting with Windows Server 2016, you can use Discrete Device Assignment, or DDA, to pass an entire PCIe device into a VM. This allows high-performance access to devices such as NVMe storage or graphics cards from within a VM while still using the device's native drivers. See Plan for Deploying Devices using Discrete Device Assignment for more details on which devices work, the possible security implications, and so on.

There are three steps to using a device with Discrete Device Assignment:

- Configure the VM for Discrete Device Assignment

- Dismount the Device from the Host Partition

- Assign the Device to the Guest VM

All commands can be executed on the host in a Windows PowerShell console running as Administrator.

Configure the VM for DDA

Discrete Device Assignment imposes some restrictions on the VM, so the following step needs to be taken.

- Configure the “Automatic Stop Action” of the VM to TurnOff.
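A minimal sketch of this step, assuming the Hyper-V PowerShell module's `Set-VM` cmdlet and a VM named `VMName` (a placeholder, not a name from your environment):

```powershell
# VMName is a placeholder; substitute the name of your VM.
# TurnOff means the VM is powered off, not saved, when the host shuts down.
Set-VM -Name VMName -AutomaticStopAction TurnOff
```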

Some Additional VM preparation is required for Graphics Devices

Some hardware performs better if the VM is configured in a certain way. To find out whether your hardware needs the following configurations, contact the hardware vendor. Additional details can be found in Plan for Deploying Devices using Discrete Device Assignment and in this blog post.

- Enable Write-Combining on the CPU

- Configure the 32 bit MMIO space

- Configure greater than 32 bit MMIO space
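The three settings above can be sketched with `Set-VM` as follows. `VMName` is a placeholder, and the sizes `3Gb` and `33280Mb` are assumed starting points for experimenting with a single GPU rather than values every device requires.

```powershell
# VMName is a placeholder; substitute the name of your VM.

# Enable write-combining on the CPU
Set-VM -Name VMName -GuestControlledCacheTypes $true

# Configure the 32-bit MMIO space (assumed starting value)
Set-VM -Name VMName -LowMemoryMappedIoSpace 3Gb

# Configure the greater-than-32-bit MMIO space (assumed starting value)
Set-VM -Name VMName -HighMemoryMappedIoSpace 33280Mb
```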

Tip

The MMIO space values above are reasonable values to set for experimenting with a single GPU. If, after starting the VM, the device reports an error relating to insufficient resources, you'll likely need to modify these values. Consult Plan for Deploying Devices using Discrete Device Assignment to learn how to precisely calculate MMIO requirements.

Dismount the Device from the Host Partition

Optional - Install the Partitioning Driver

Discrete Device Assignment gives hardware vendors the ability to provide a security mitigation driver with their devices. Note that this driver is not the same as the device driver that will be installed in the guest VM. Providing this driver is at the hardware vendor's discretion; if they do provide it, install it before dismounting the device from the host partition. Contact the hardware vendor to find out whether they have a mitigation driver.

If no partitioning driver is provided, you must use the -force option during dismount to bypass the security warning. Read more about the security implications of doing this in Plan for Deploying Devices using Discrete Device Assignment.

Locating the Device's Location Path

The PCI location path is required to dismount the device from the host and to mount it again. An example location path looks like the following: 'PCIROOT(20)#PCI(0300)#PCI(0000)#PCI(0800)#PCI(0000)'. More details on locating the location path can be found in Plan for Deploying Devices using Discrete Device Assignment.
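One way to look up the location path from PowerShell is sketched below. It assumes the device of interest is a display-class device and simply takes the first match; the `Display` class filter and the variable names are illustrative choices, so adjust them for your hardware.

```powershell
# Enumerate PnP devices that are currently present on the host
$pnpdevs = Get-PnpDevice -PresentOnly

# Keep only display-class devices (assumption: the target device is a GPU)
$gpudevs = $pnpdevs | Where-Object { $_.Class -like "Display" }

# Read the location paths of the first match; the first entry is the PCI path
$locationPath = ($gpudevs[0] | Get-PnpDeviceProperty -KeyName DEVPKEY_Device_LocationPaths).Data[0]

# Print the path so it can be checked before it is used
$locationPath
```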

Disable the Device

Using Device Manager or PowerShell, ensure the device is “disabled.”
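A minimal PowerShell sketch of disabling the device, assuming the target is the first display-class device on the host. Verify the selected device before running it, because disabling the wrong adapter affects the host.

```powershell
# Select the first display-class device (assumption: this is the device to pass through)
$device = (Get-PnpDevice -PresentOnly | Where-Object { $_.Class -like "Display" })[0]

# Show which device was selected before changing anything
$device | Format-List FriendlyName, InstanceId, Status

# Disable the device on the host; -Confirm:$false suppresses the interactive prompt
Disable-PnpDevice -InstanceId $device.InstanceId -Confirm:$false
```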

Dismount the Device

Depending on whether the vendor provided a mitigation driver, you either need to use the “-force” option or not.

- If a Mitigation Driver was installed

- If a Mitigation Driver was not installed
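The two cases can be sketched as follows. Run only one of the two dismount commands. The location path shown is the example value from this article, not a path from your system.

```powershell
# Example location path from this article; replace it with your device's path
$locationPath = "PCIROOT(20)#PCI(0300)#PCI(0000)#PCI(0800)#PCI(0000)"

# If a mitigation driver was installed
Dismount-VMHostAssignableDevice -LocationPath $locationPath

# If a mitigation driver was not installed (bypasses the security warning)
Dismount-VMHostAssignableDevice -Force -LocationPath $locationPath
```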

Assigning the Device to the Guest VM

The final step is to tell Hyper-V that a VM should have access to the device. In addition to the location path found above, you'll need to know the name of the VM.
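A minimal sketch of the assignment, assuming a VM named `VMName` (a placeholder) and the example location path from this article:

```powershell
# Example location path from this article; replace it with your device's path
$locationPath = "PCIROOT(20)#PCI(0300)#PCI(0000)#PCI(0800)#PCI(0000)"

# Assign the dismounted device to the VM (VMName is a placeholder)
Add-VMAssignableDevice -LocationPath $locationPath -VMName VMName
```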

What's Next

After a device is successfully mounted in a VM, you can start that VM and interact with the device as you normally would on a bare-metal system. This means you can now install the hardware vendor's drivers in the VM, and applications will be able to see the hardware. You can verify this by opening Device Manager in the guest VM and checking that the hardware shows up.

Removing a Device and Returning it to the Host

If you want to return the device to its original state, you need to stop the VM and issue the following:
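A minimal sketch of the removal, assuming the same placeholder VM name `VMName` and the example location path from this article:

```powershell
# Example location path from this article; replace it with your device's path
$locationPath = "PCIROOT(20)#PCI(0300)#PCI(0000)#PCI(0800)#PCI(0000)"

# Remove the device from the stopped VM (VMName is a placeholder)
Remove-VMAssignableDevice -LocationPath $locationPath -VMName VMName

# Mount the device back in the host partition
Mount-VMHostAssignableDevice -LocationPath $locationPath
```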

You can then re-enable the device in Device Manager, and the host operating system will be able to interact with the device again.

Example

Mounting a GPU to a VM

In this example we use PowerShell to configure a VM named “ddatest1”, take the first available GPU from the manufacturer NVIDIA, and assign it to the VM.
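The script below is a sketch of that workflow under a few assumptions: the VM is turned off, no mitigation driver is installed (hence `-Force`), and the MMIO sizes are the single-GPU starting values rather than calculated requirements.

```powershell
# Configure the VM for Discrete Device Assignment
$vm = "ddatest1"

# Set the automatic stop action to TurnOff
Set-VM -Name $vm -AutomaticStopAction TurnOff
# Enable write-combining on the CPU
Set-VM -Name $vm -GuestControlledCacheTypes $true
# Configure the 32-bit MMIO space (assumed starting value)
Set-VM -Name $vm -LowMemoryMappedIoSpace 3Gb
# Configure the greater-than-32-bit MMIO space (assumed starting value)
Set-VM -Name $vm -HighMemoryMappedIoSpace 33280Mb

# Find the location path and disable the device
# Enumerate all PnP devices present on the system
$pnpdevs = Get-PnpDevice -PresentOnly
# Select only display devices manufactured by NVIDIA
$gpudevs = $pnpdevs | Where-Object { $_.Class -like "Display" -and $_.Manufacturer -like "NVIDIA" }
# Take the location path of the first matching device
$locationPath = ($gpudevs[0] | Get-PnpDeviceProperty -KeyName DEVPKEY_Device_LocationPaths).Data[0]
# Disable the PnP device on the host
Disable-PnpDevice -InstanceId $gpudevs[0].InstanceId -Confirm:$false

# Dismount the device from the host (-Force because no mitigation driver is assumed)
Dismount-VMHostAssignableDevice -Force -LocationPath $locationPath

# Assign the device to the guest VM
Add-VMAssignableDevice -LocationPath $locationPath -VMName $vm
```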

Troubleshooting

If you've passed a GPU into a VM but Remote Desktop or an application isn't recognizing the GPU, check for the following common issues:

- Make sure you've installed the most recent version of the GPU vendor's supported driver and that the driver isn't reporting errors by checking the device state in Device Manager.

- Make sure your device has enough MMIO space allocated within the VM. To learn more, see MMIO Space.

- Make sure you're using a GPU that the vendor supports being used in this configuration. For example, some vendors prevent their consumer cards from working when passed through to a VM.

- Make sure the application being run supports running inside a VM, and that both the GPU and its associated drivers are supported by the application. Some applications have allow-lists of GPUs and environments.

- If you're using the Remote Desktop Session Host role or Windows MultiPoint Services on the guest, make sure that a specific Group Policy entry is set to allow use of the default GPU. Using a Group Policy Object applied to the guest (or the Local Group Policy Editor on the guest), navigate to the following Group Policy item: Computer Configuration > Administrative Templates > Windows Components > Remote Desktop Services > Remote Desktop Session Host > Remote Session Environment > Use the hardware default graphics adapter for all Remote Desktop Services sessions. Set this value to Enabled, then reboot the VM once the policy has been applied.

Applies To: Windows Server 2019, Hyper-V Server 2019, Windows Server 2016, Hyper-V Server 2016, Windows Server 2012 R2, Hyper-V Server 2012 R2, Windows 10, Windows 8.1

The following feature distribution map indicates the features in each version. The known issues and workarounds for each distribution are listed after the table.

Table legend

Built in - BIS (FreeBSD Integration Services) are included as part of this FreeBSD release.

✔ - Feature available

(blank) - Feature not available

| Feature | Windows Server operating system version | 12-12.1 | 11.1-11.3 | 11.0 | 10.3 | 10.2 | 10.0 - 10.1 | 9.1 - 9.3, 8.4 |
|---|---|---|---|---|---|---|---|---|
| Availability | | Built in | Built in | Built in | Built in | Built in | Built in | Ports |
| Core | 2019, 2016, 2012 R2 | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Windows Server 2016 Accurate Time | 2019, 2016 | ✔ | ✔ | | | | | |
| Networking | | | | | | | | |
| Jumbo frames | 2019, 2016, 2012 R2 | ✔ Note 3 | ✔ Note 3 | ✔ Note 3 | ✔ Note 3 | ✔ Note 3 | ✔ Note 3 | ✔ Note 3 |
| VLAN tagging and trunking | 2019, 2016, 2012 R2 | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Live migration | 2019, 2016, 2012 R2 | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Static IP Injection | 2019, 2016, 2012 R2 | ✔ Note 4 | ✔ Note 4 | ✔ Note 4 | ✔ Note 4 | ✔ Note 4 | ✔ Note 4 | ✔ |
| vRSS | 2019, 2016, 2012 R2 | ✔ | ✔ | ✔ | | | | |
| TCP Segmentation and Checksum Offloads | 2019, 2016, 2012 R2 | ✔ | ✔ | ✔ | ✔ | ✔ | | |
| Large Receive Offload (LRO) | 2019, 2016, 2012 R2 | ✔ | ✔ | ✔ | ✔ | | | |
| SR-IOV | 2019, 2016 | ✔ | ✔ | ✔ | | | | |
| Storage | | Note 1 | Note 1 | Note 1 | Note 1 | Note 1 | Note 1,2 | Note 1,2 |
| VHDX resize | 2019, 2016, 2012 R2 | ✔ Note 6 | ✔ Note 6 | ✔ Note 6 | | | | |
| Virtual Fibre Channel | 2019, 2016, 2012 R2 | | | | | | | |
| Live virtual machine backup | 2019, 2016, 2012 R2 | ✔ | ✔ | | | | | |
| TRIM support | 2019, 2016, 2012 R2 | ✔ | ✔ | | | | | |
| SCSI WWN | 2019, 2016, 2012 R2 | | | | | | | |
| Memory | | | | | | | | |
| PAE Kernel Support | 2019, 2016, 2012 R2 | | | | | | | |
| Configuration of MMIO gap | 2019, 2016, 2012 R2 | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| Dynamic Memory - Hot-Add | 2019, 2016, 2012 R2 | | | | | | | |
| Dynamic Memory - Ballooning | 2019, 2016, 2012 R2 | | | | | | | |
| Runtime Memory Resize | 2019, 2016 | | | | | | | |
| Video | | | | | | | | |
| Hyper-V specific video device | 2019, 2016, 2012 R2 | | | | | | | |
| Miscellaneous | | | | | | | | |
| Key/value pair | 2019, 2016, 2012 R2 | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ Note 5 | ✔ |
| Non-Maskable Interrupt | 2019, 2016, 2012 R2 | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ | ✔ |
| File copy from host to guest | 2019, 2016, 2012 R2 | | | | | | | |
| lsvmbus command | 2019, 2016, 2012 R2 | | | | | | | |
| Hyper-V Sockets | 2019, 2016 | | | | | | | |
| PCI Passthrough/DDA | 2019, 2016 | ✔ | ✔ | | | | | |
| Generation 2 virtual machines | | | | | | | | |
| Boot using UEFI | 2019, 2016, 2012 R2 | ✔ | ✔ | | | | | |
| Secure boot | 2019, 2016 | | | | | | | |

Notes

1. Label disk devices to avoid a ROOT MOUNT ERROR during startup.

2. The virtual DVD drive may not be recognized when BIS drivers are loaded on FreeBSD 8.x and 9.x unless you enable the legacy ATA driver.

3. 9126 is the maximum supported MTU size.

4. In a failover scenario, you cannot set a static IPv6 address in the replica server. Use an IPv4 address instead.

5. KVP is provided by ports on FreeBSD 10.0. See the FreeBSD 10.0 ports on FreeBSD.org for more information.

6. To make VHDX online resizing work properly in FreeBSD 11.0, a manual step is required to work around a GEOM bug which is fixed in 11.0+: after the host resizes the VHDX disk, open the disk for write and run “gpart recover”.

Additional Notes: The feature matrix of 10 stable and 11 stable is the same as that of the FreeBSD 11.1 release. In addition, FreeBSD 10.2 and previous versions (10.1, 10.0, 9.x, 8.x) are end of life. Refer to FreeBSD.org for an up-to-date list of supported releases and the latest security advisories.